Generating Mozart

LSTM trained to output music

The neural net successfully learned to generate Mozart!

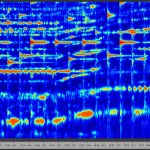

This week I trained an LSTM to predict the next time step of a musical piece, given the previous 120 time steps. Once training is complete, I use the model to generate new musical patterns. I ask the model to predict a step (prompted either by silence or by an introductory Mozart clip), then I feed that prediction back into the LSTM and ask it to predict the next step. I repeat this loop for several time steps. In the examples above, the first generation was prompted by silence and the second by a Mozart clip from the validation set.
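The generation loop described above can be sketched roughly as follows. This is only an illustration under stated assumptions: `predict_step` and `toy_model` are hypothetical stand-ins for the trained LSTM, and the input is assumed to be one row per time step with one column per piano key, which may not match the encoding I actually use.

```python
import numpy as np

WINDOW = 120      # context length mentioned in the post
NUM_PITCHES = 88  # assumption: one column per piano key


def generate(predict_step, seed, num_steps):
    """Roll a next-step predictor forward autoregressively.

    predict_step: hypothetical callable mapping a (WINDOW, NUM_PITCHES)
        array to a (NUM_PITCHES,) array of note-on probabilities.
    seed: (WINDOW, NUM_PITCHES) array; all zeros means "prompted by silence".
    """
    context = np.array(seed, dtype=np.float32)
    generated = []
    for _ in range(num_steps):
        probs = predict_step(context)
        step = (probs >= 0.5).astype(np.float32)  # best guess for each key
        generated.append(step)
        # Slide the window: drop the oldest step, append the new one.
        context = np.vstack([context[1:], step])
    return np.stack(generated)


def toy_model(context):
    """Stand-in for the trained LSTM: shift the last step up by one key."""
    return np.roll(context[-1], 1)


silence = np.zeros((WINDOW, NUM_PITCHES), dtype=np.float32)
prompt = silence.copy()
prompt[-1, 39] = 1.0  # a single note sounding at the end of the prompt

from_silence = generate(toy_model, silence, num_steps=16)
from_prompt = generate(toy_model, prompt, num_steps=16)
```

With the toy model, the silent prompt stays silent and the one-note prompt climbs the keyboard one key per step. A real model would be plugged in through `predict_step`.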

I don’t recognize the generated pieces offhand, but I do suspect the model has overfit to the Mozart training data and is reproducing notes from existing works (add a comment below if you can name them!). I’m still very excited by the results. Right now I take the model’s best guess at each time step, but I could also sample some of the slightly less likely guesses, as a way of introducing some randomness without moving too far away from Mozart’s style.
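One common way to sample the less likely guesses is temperature sampling. The sketch below is a generic version of that technique, not what my model currently does. It assumes the model outputs a probability for each of a handful of candidate steps, and the numbers in `probs` are made up for illustration.

```python
import numpy as np


def sample_with_temperature(probs, temperature, rng):
    """Pick an index from `probs`, reshaped by `temperature`.

    temperature < 1 sharpens the distribution toward the best guess;
    temperature > 1 flattens it and admits less likely choices.
    """
    logits = np.log(np.asarray(probs, dtype=np.float64) + 1e-12)
    scaled = logits / temperature
    scaled -= scaled.max()  # subtract the max for numerical stability
    weights = np.exp(scaled)
    weights /= weights.sum()
    return int(rng.choice(len(weights), p=weights))


rng = np.random.default_rng(0)
probs = [0.70, 0.20, 0.08, 0.02]  # made-up model output for four candidates

greedy = int(np.argmax(probs))  # "best guess" decoding
cool = [sample_with_temperature(probs, 0.05, rng) for _ in range(200)]
warm = [sample_with_temperature(probs, 1.0, rng) for _ in range(200)]
```

At a very low temperature the samples collapse onto the best guess, while at a temperature of 1.0 the less likely candidates appear roughly in proportion to their probabilities. Somewhere in between is where I would expect to find variety that still sounds like Mozart.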

I’ve included above an example of an actual Mozart clip from my training data. I’m using MIDI files, which I transform into a numerical array. Here they’ve been translated back to MIDI, and then to WAV format for playback on this blog. Unfortunately, in this process I lose any information about dynamics (how loud or soft the notes should be) and any slight variations in timing. Both the training data and the generated output would clearly sound more convincing if performed by an actual pianist, who can make subtle but expressive timing changes and would choose to emphasize some notes while keeping others in the background.
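To make the loss of dynamics and timing concrete, here is a minimal sketch of one possible encoding: a binary piano roll on a fixed grid. This is an assumption for illustration, not necessarily the array format I use. The note tuples, the sixteenth-note grid, and both helper functions are hypothetical, and the MIDI parsing itself is left out.

```python
import numpy as np

LOWEST_MIDI = 21     # A0, the lowest key on an 88-key piano
NUM_KEYS = 88
STEPS_PER_BEAT = 4   # assumption: sixteenth-note grid


def notes_to_roll(notes, num_steps):
    """notes: iterable of (midi_pitch, start_beat, duration_beats)."""
    roll = np.zeros((num_steps, NUM_KEYS), dtype=np.uint8)
    for pitch, start, duration in notes:
        first = int(round(start * STEPS_PER_BEAT))
        last = max(first + 1, int(round((start + duration) * STEPS_PER_BEAT)))
        roll[first:last, pitch - LOWEST_MIDI] = 1  # on/off only, no velocity
    return roll


def roll_to_notes(roll):
    """Invert notes_to_roll, merging consecutive active steps into one note."""
    notes = []
    for key in range(roll.shape[1]):
        active = np.flatnonzero(roll[:, key])
        if active.size == 0:
            continue
        # Split the active steps wherever there is a gap.
        runs = np.split(active, np.flatnonzero(np.diff(active) > 1) + 1)
        for run in runs:
            start = run[0] / STEPS_PER_BEAT
            duration = run.size / STEPS_PER_BEAT
            notes.append((key + LOWEST_MIDI, float(start), float(duration)))
    return sorted(notes, key=lambda n: (n[1], n[0]))


# Middle C (60) played slightly late and slightly long, then E (64) on beat 1.
played = [(60, 0.03, 1.02), (64, 1.0, 0.5)]
roll = notes_to_roll(played, num_steps=8)
recovered = roll_to_notes(roll)
```

After the round trip, the slightly late, slightly long middle C comes back snapped to the grid, and there is nowhere in the array to store how hard a key was struck. This simple version also merges back-to-back repeats of the same pitch into one long note, which is one more reason to experiment with the encoding.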

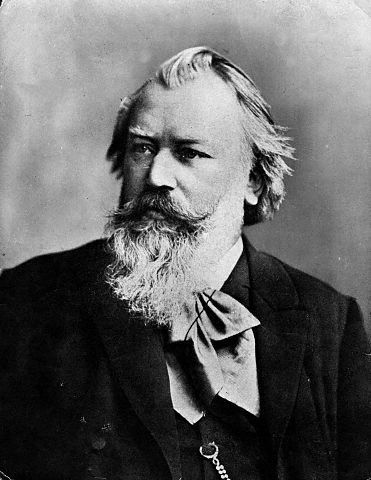

Imitating Romantic Period Piano Music

Training on Brahms, Schumann, and Rachmaninoff

Mozart composed his music in the late 1700s, when the piano keyboard range was smaller and harmonies were generally simpler and more predictable. Later composers such as Brahms, Schumann, and, much later, Rachmaninoff were part of the Romantic tradition. Here the piano had its full 88 keys, and musical structure became increasingly complex. Still, unlike later modern classical music, even an untrained listener can hear recognizable harmonies and patterns, and the notes don’t sound random.

I wanted to see how my model would handle the complexities of Romantic music, so I next trained on a corpus of Brahms, Schumann, and Rachmaninoff piano music (all drawn from the MIDI files available at Classical Archives). I find these results fascinating and promising. Even though the outputs aren’t as clear and polished, the model creates harmonies that are much more elaborate and varied, and it predicts patterns that are musically reasonable (as a pianist, I hear patterns that are distinctive to each composer).

I’ll describe my methods in more detail in a future blog post. Right now I’m experimenting with several new ideas (I’m using MIT’s music21 library to handle the MIDI files, but otherwise I’ve built these models from scratch). I’m still deep in the process of exploring what works well and what gets in the way.

Upcoming

Moving forward, I’m going to continue to fine-tune this project. I’ll try using a transformer architecture in place of this LSTM model. I’m also going to experiment with the way I convert from MIDI to a numerical array and back again. I’ll work on collecting more data and on pushing the Mozart generator to create increasingly varied patterns. I’m also interested in generating music for multiple instruments (probably piano and violin, or else string trio).