Clara: A Neural Net Music Generator

Project Overview

Clara is an LSTM that composes piano music and chamber music. It has some parallels to Google’s Magenta project, although it’s an entirely separate project, and uses PyTorch, MIT’s music21, and the FastAI library. I use a 62 note range (instead of the full 88-key piano), and I allow any number of notes to play at each musical time step (in contrast with many generation models which insist on having always exactly 4 notes at a time, or in having much smaller note ranges). I trained my models using midi samples from Classical Archives, although the code should work for any piano midi files, as well as for many chamber music files.

The code is available here. In particular, take a look at BasicIntro.ipynb, a Jupyter Notebook where you can create your own musical generations with my pretrained model.

A more detailed write-up of this project is available here. Try guessing which samples are human and which are AI here.

Midi to Text Encoding

One of the most important factors in the success of the musical generations turned out to be my choice of encoding the midi files into text formats. The midi files provide the timing of when each note starts and stops (it also contains information about the instrument, the volume, and tempo markings for the piece). These note events can happen at arbitrary times, there can be any number of notes playing at once. You can read more about midi file format here.

Sample Frequency

Music often has a quarter note as a basic measurement of time. It is very common to see notes played 3 times or 4 times per quarter note (triplets, or 16ths, respectively). Because of this, I sample the midi files either 12 times per quarter note (to allow for both triplets and 16th to be encoded faithfully), or 4 times per quarter note (triplets are distorted slightly, but it makes it easier for a model to learn a longer scale musical pattern). The user can also specify a different sampling frequency.

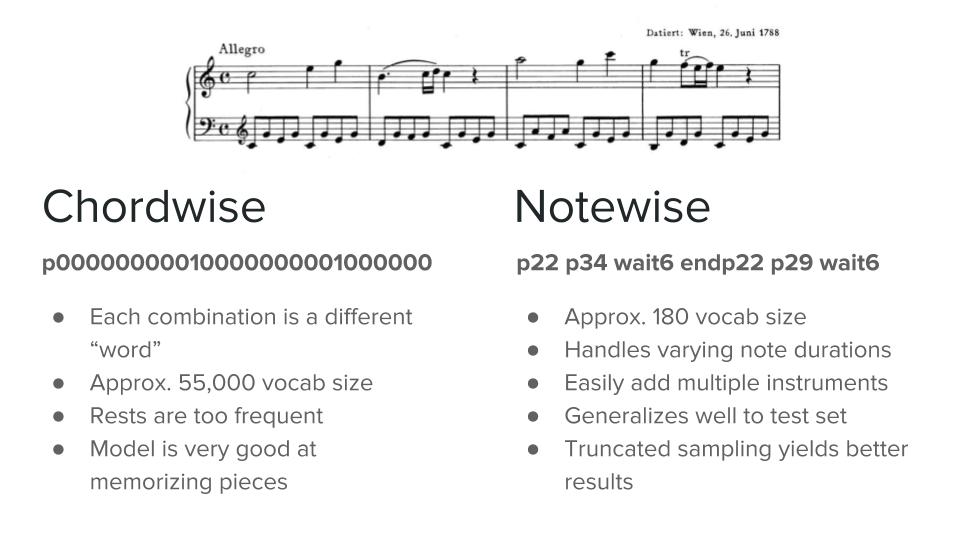

Chordwise vs Notewise

One option would be to ask the model to predict yes/no for each of the 88 piano keys, at every musical time step. However, since each individual key is silent for the great majority of the time, it would be very difficult for a model to learn to ever risk predicting “yes” for a note. I offer two different possible solutions for this problem. In language models, we often work at either the word-level or character-level. Similarly, for music generation, I offer either chordwise or notewise levels.

For chordwise, I consider each combination of notes that are ever seen in the musical corpus to be a chord. There are usually on the order of 55,000 different chords (depending on the note range and the number of different composers). Then I treat the chord progression exactly as a language. In the same way a language model might predict “cat” when prompted with “The large dog chased the small …”, I ask the musical model to predict the next chord when prompted with a chord sequence. Note that a “chord” is used generally here – it is any combination of notes played all at one time, not necessarily a traditional musical chord (such as “C Major”).

For notewise, I consider each note start and stop to be a different word (so the words might be “p28” and “endp28” for the start and stop of note #28 on the piano). I then use “wait” to denote the end of a time step. In this way, the vocab size is around 180.

Chamber Music

The notewise representation lends itself relatively easily to adding in multiple instruments. The main difference is in encoding the midi files. Here, I mark violin notes with the prefix “v” and piano notes with the prefix “p”, but otherwise train the music generator in the same way. One problem is there are much fewer violin/piano pieces than pure piano solo pieces. I include any pieces that are a solo instrument and piano (such as cello/piano, horn/piano), and I transpose the solo instrument parts to be within the violin range. In the future I may explore ways to use the piano solo music to pretrain the network.

Data Augmentation

For all the encodings, I also transpose all the pieces into every possible key. The test set is kept separate: a piece in the test set cannot be a transposition of a piece in the training set.

Musical Generator

I used an AWD-LSTM network from the FastAI library as the generator model. In train.py, one can easily modify the hyperparameters (changing the embedding size, number of LSTM layers, amount of dropout, etc). I train the model by asking it to predict the next note or chord, given an input sequence.

Once the model is trained, I create generations by sampling the output prediction, and then feeding that back into the model, and asking it to predict the next step, and so forth. In this way, generated samples can be of arbitrary length. I achieved much better results by not always sampling the most likely prediction by the model. With an adjustable frequency, I sometimes select the 2nd or 3rd most likely guesses (according to the probabilities predicted by the model). In this way, the generations are more diverse, and a single prompt can produce a large variety of samples.

Results

Here are some samples from notewise and chordwise piano solo generations, and from notewise chamber music generation.

Here are some benefits and disadvantages I found in using the two methods:

Chordwise representation:

- Able to recreate 45 seconds of Mozart

- Better long scale patterns

- Does not generalize well beyond the training set

- More variety in quality of generations

- Usually most effective to sample top prediction at each timestep

- Odd effect where it will occasionally “jump” to a neighboring key, but otherwise continue playing the passage normally

Notewise representation:

- Creates musical sounding samples even when prompted with music it has never seen before

- Handles a 12-step sample frequency well, which allows it to generate both 16th and triplets smoothly

- Able to create small-scale rhythmic patterns that are musically coherent

- Does not create long scale patterns well

- Easily extends to chamber music

- Easily handles note durations (particularly important for violin, which often holds notes much longer than piano)

- Usually most effective to sample from the top 3 predictions at each timestep

Critic and Composer Classifier

I created a critic model that attempts to classify whether a sample of music is human composed or neural net composed. “Real” samples come from the original human-composed midi files (encoded into text files). “Fake” samples are created by the generator LSTM.

This can be used as a way to score musical generations. (This is quite similar to a GAN, but I don’t use the scores as a target for training the generator.) In this way, I can select the best (or worst!) samples from a batch created by the generator.

I also created a composer model that attempts to classify which human composer created the music sample. Training samples come from the original human-composed midi files (again encoded into text file).

This can be used to create an Inception-like score for the models. Models score highly when each individual generation is very much like a specific human composer, and when on average there are generations that match all of the different human composers. A model would score poorly for generating only pieces like Chopin, and never generating ones like any of the other humans.

Alternately, it is interesting to combine these two model scores. Consider a sample that the Critic considers “real”, but which the Composer Classifier thinks don’t match any human composer: this might sound like a new musical style.

Future Directions

There are many possible directions for this project. In particular, I’m interested in the problem of creating pieces that have a longer scale coherency or structure. I suspect there may be a way to combine the chordwise and notewise encodings, or to otherwise to first have a model that predicts a full measure at a time, and then a second model that fleshes that measure prediction encoding into individual notes.

Using the Musical Inception score as a metric, I would like to do a more scientific search for the ideal hyperparameters and encoding choices, training several models in parallel.

Using the Composer Classifier, I’m also working on creating an audio version of Google’s DeepDream, adjusting the input music sample to look more like any of the individual human composers.

Thanks

I’ve had an amazing summer as part of OpenAI’s Scholars Program. I want to say a special thanks to my brilliant mentor Karthik Narasimhan (who is starting this fall as an assistant professor at Princeton!), to the other scholars (I’m proud to be part of such a talented, kind, and inspiring group!), to the OpenAI team as a whole, and in particular to Larissa Schiavo who organized the scholars program, and who did a great job keeping us on track and making us feel welcome.

-

[…] Final Blog Post Final Project Github Repo […]

[…] Christine Payne’s talk at OpenAI Scholars Demo Day, 9/20/2018. You can read more about her final project here: http://christinemcleavey.com/clara-a-neural-net-music-generator/ […]

[…] famous OpenAI research lab. In her most recent OpenAI project, she used fastai to help her create Clara: A Neural Net Music Generator. Here is some of her generated chamber music. Christine […]

[…] famous OpenAI research lab. In her most recent OpenAI project, she used fastai to help her create Clara: A Neural Net Music Generator. Here is some of her generated chamber music. Christine […]

[…] Research Fellow Christine McLeavey Payne shared her research on a neural net music generator named Clara. Payne says it took her only two weeks to build the music generator and get initial results using […]

[…] McLeavey Payne may have finally cured songwriter’s block. Her recent project Clara is a long short-term memory (LSTM) neural network that composes piano and chamber music. Just give […]

Leave a CommentYou must be logged in to post a comment.

wow Christine! You became so good so fast. It wasn’t too long ago that when on Coursera Deep Learning that you were a new mentor

Thank you, that’s really nice to hear! I love Andrew Ng’s Coursera sequence & learned so much from mentoring. It’s nice to feel more fluent these days, even though there’s still so much to learn.

OMG you’re mentioned again in Fastai v3 lesson 1

Thanks for letting me know, I hadn’t realized that. I’ve watched both Fastai v1 and v2, I love the course!

This is really cool! ML is growing so fast… I wish Mozart was alive to hear these!

Wow, Christine. This is what I want to do and didn’t know how to start. I really appreciate you share what you used to work on this beauty.